Solving the Threat Modeling Bottleneck with AI Workflows

As software teams grow and projects multiply, security teams face a scaling problem. Threat modeling requests that once took days now take weeks. Some never get reviewed at all. AI-assisted threat modeling can analyze designs and produce recommendations far faster than manual methods, keeping overtaxed security teams from becoming a bottleneck.

Security practitioners should see past the naïveté of a free lunch here, though. Threat modeling is hardly a tidy algorithm, and while a sloppy hallucination-filled STRIDE analysis based on scant fluffs of technical specification may fulfill a compliance requirement, forwarding it unread to your development team while you sip a mai-tai poolside is not a recipe for success.

So we need to scale threat modeling without sacrificing quality. I see two promising approaches: developer-led threat modeling and AI-assisted threat modeling. While each is independently worthwhile, I propose that these two great tastes taste great together. AI tooling may be the key to decentralized security analysis, freeing up security attention to keep recommendation quality high.

What is threat modeling and why do we do it?

The principle of "secure by design" and the practice of threat modeling are based on the idea that bad security outcomes are preventable through good judgment. Security teams aspire to trim bad timelines at the root as they "shift left" to squash nascent bad tradeoffs and oversights before code is concrete.

Design-time security analysis may be a world-tilting lever, but there's no consensus on where the handle is. I've supported organizations "threat modeling" for a couple of decades and spoken with leaders of security programs of various sizes and scopes about their practices. Here's what I've learned: it means completely different things to different (extremely smart) people.

Some folks swear by STRIDE. Others use Mozilla's Rapid Risk Assessment. Google has their approach. Trail of Bits has theirs. The Threat Modeling Manifesto distills it down to four questions. Jacob Kaplan-Moss wrote about "two scenario threat modeling". I wrote about scalable threat modeling years ago.

It's incredibly easy to get lost in the process of it: futzing with data flow diagrams, arguing about whether you've correctly applied PASTA, or deciding if your attack trees are comprehensive enough. And you can end up wasting time on effort that doesn't reduce risk. From Microsoft in 2007:

If I have to spend 20 minutes filling out all the little boxes concerning the various bits of a threat model, doing a DFD, all to find that "Hello World" has no threats (much less any vulns), that's a waste.

All this to say: there's no one-size-fits-all, and security teams have latitude and even responsibility to tailor the various approaches to a fit for their organizational context. But as they do this, how do they know if they're doing it well?

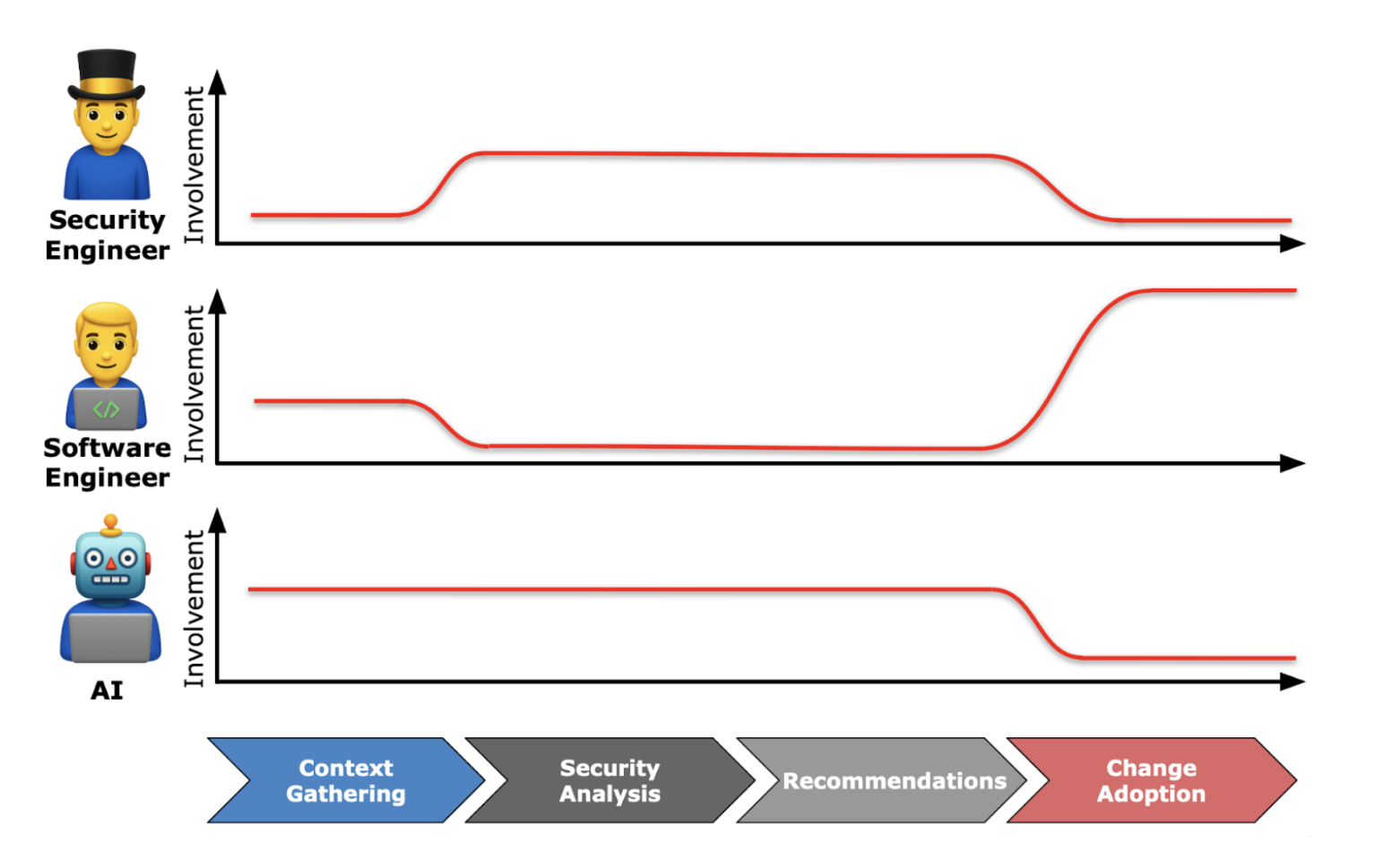

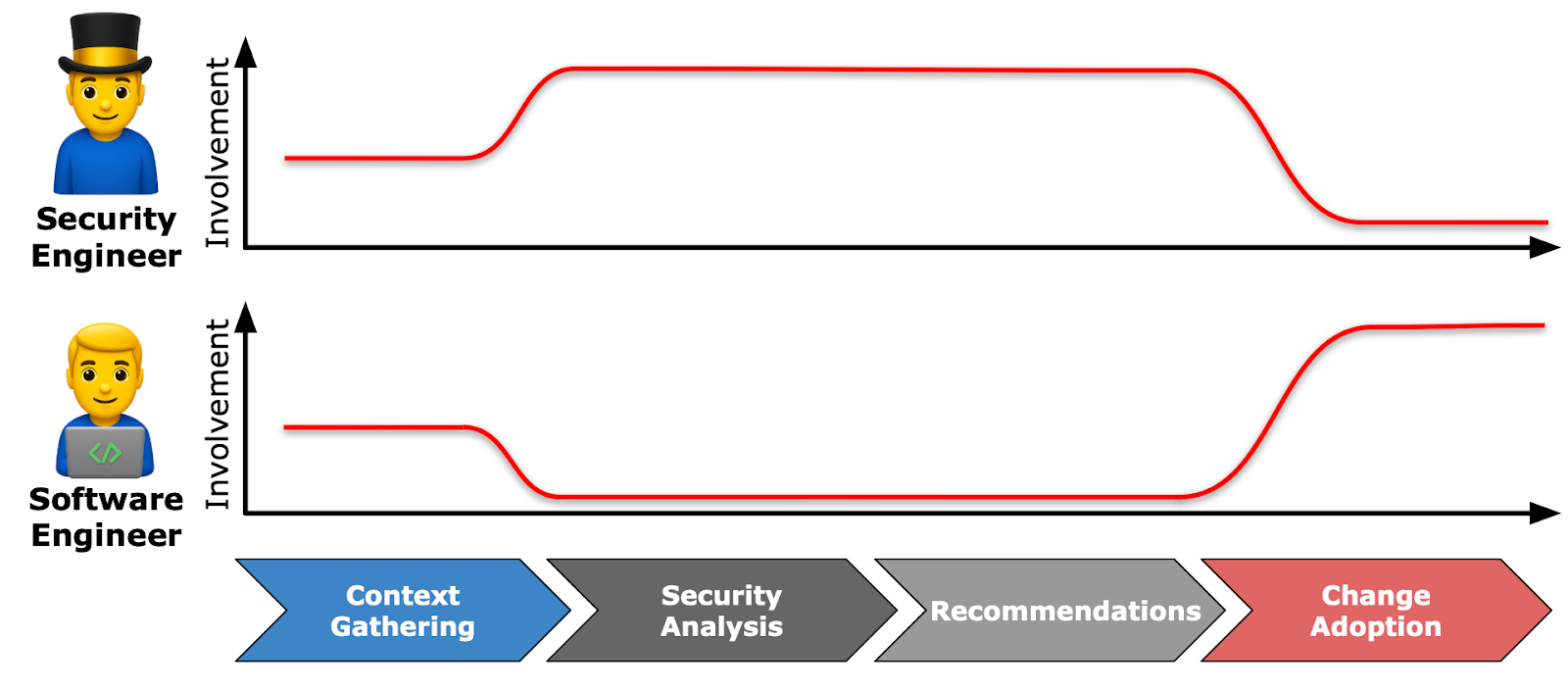

Remember - security teams hoping to reduce risk at design time need to influence the design. This means that the creation of a "report" is not a useful outcome. And perhaps surprisingly, neither is the documentation of a bunch of countermeasures the team was going to implement anyway. The useful outcome is the communication of new design alternatives that are considered and adopted. Think of it as a collaborative function of effort by security and software engineers:

You collaborate to take in context about what’s being built. You analyze, then hand over recommendations that result in changes. You minimize the time and resources spent, and maximize the ROI of your suggestions.

Different organizations use different methodologies, and some call it “secure design review” rather than “threat model”. That's fine. From the perspective of the function, the methodology is an implementation detail. The interface stays consistent: context in, recommendations out, changes implemented. The people building systems just need useful output they can adopt in their design. That's the full value cycle.
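To make that interface concrete, here is a minimal Python sketch of the function as a data structure. The class names, fields, and the "adopted changes per hour" measure are my own illustrative assumptions, not an established metric; the point is only that the output worth counting is adopted changes, not reports.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    """One suggested design change (hypothetical structure)."""
    summary: str
    adopted: bool = False  # did the change actually land in the design?


@dataclass
class ThreatModelRun:
    """One pass through the function: context in, recommendations out."""
    context: str
    hours_spent: float
    recommendations: list[Recommendation] = field(default_factory=list)

    def adopted_count(self) -> int:
        return sum(1 for r in self.recommendations if r.adopted)

    def adoption_rate(self) -> float:
        """Fraction of recommendations that resulted in design changes."""
        if not self.recommendations:
            return 0.0
        return self.adopted_count() / len(self.recommendations)

    def adopted_per_hour(self) -> float:
        """Adopted changes per hour of effort: a crude stand-in for ROI."""
        if self.hours_spent <= 0:
            return 0.0
        return self.adopted_count() / self.hours_spent


# Illustrative data only; not taken from a real review.
run = ThreatModelRun(
    context="New file-upload endpoint for customer avatars",
    hours_spent=4.0,
    recommendations=[
        Recommendation("Validate content type server-side", adopted=True),
        Recommendation("Serve uploads from a separate domain", adopted=True),
        Recommendation("Add a WAF rule", adopted=False),
    ],
)
print(f"adoption rate: {run.adoption_rate():.2f}")
print(f"adopted changes per hour: {run.adopted_per_hour():.2f}")
```

Whatever methodology sits inside the function, tracking something like these two numbers over time is one way to tell whether a change in process is actually helping.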

The scaling problem and three attempts to solve it

Even with a perfectly optimized function, threat modeling has a big constraint: security team time and effort. It becomes acute as the shipping pace quickens and attention is saturated. The increased latency may feel familiar: development teams waiting weeks or months for feedback from a security team rushing to fight fires elsewhere.

Technique one: hire more people

One technique is to grow the security team, which can work if the organization leadership gets it, but there are a few tradeoffs. One, it's hard to scale a team of smart people doing great work. Two, it costs a bunch of money. This is an OK tactic, but my sense is that a common strategy of great security leaders is to grow sub-linearly with the organization. After all, ROI is measured with a denominator that includes headcount. For security to be a competitive advantage for an organization, it doesn't only need to be effective; it needs to be efficient.

Technique two: have developers threat model

We can get rid of the limiting factor - the security analyst - by making threat modeling "self-serve". A big benefit to this approach is that developers already understand the systems they're building, so there's no need to document, meet, and interview or otherwise communicate what they're working on to the analyst. Security teams can still play a role, either by handling the more complicated changes or by reviewing and approving the developer-led threat models.

It's not a new idea, and organizations that have published their experiences discuss the tradeoffs. Snowflake teams spent 2-6 hours per model with inconsistent quality and began questioning "whether they had time for security" until they started scoping out lower-risk projects. New Relic and OutSystems encountered similar challenges. It's doable, but it's important to note that developers might lack the expertise and motivation to think adversarially about their own work. Without security team involvement, quality and completeness are at risk.

Technique three: using AI to help threat model

Enter the new hotness: using AI to generate threat models. The idea of faster analysis and better coverage with indefatigable LLMs is compelling. This seems like a natural direction, as AI is impacting every nook and cranny of knowledge work. Numerous companies are publishing details about their approach to AI threat modeling, and product companies are springing up in the space.

But just like threat modeling itself, "AI-assisted threat modeling" is vague, and approaches that lack nuance are unlikely to produce high quality results. There are two major limitations I see with basic AI threat modeling:

Lack of extant context: Taking "tech specs" or similar developer-produced artifacts describing proposed changes and trying to perform a one-shot threat model using LLMs assumes documentation adequately describes the system in question. Without adequate understanding of the details of the change that are security-relevant, the analysis may be too generic or surface-level.

Ineffective curation of recommendations: Providing recommendations output by an LLM without a comprehensive understanding of the existing countermeasures, tradeoffs, and "taste" (i.e. does this fit our organizational values/style?) is likely to lead to a set of recommendations that do not directly result in system changes, watering down the effectiveness of the threat modeling exercise and putting the trust of the collaborative relationship at risk.

In both cases, you can miss the mark without heavy involvement from the beleaguered humans of the security team.

Applying AI to gather context and draft recommendations

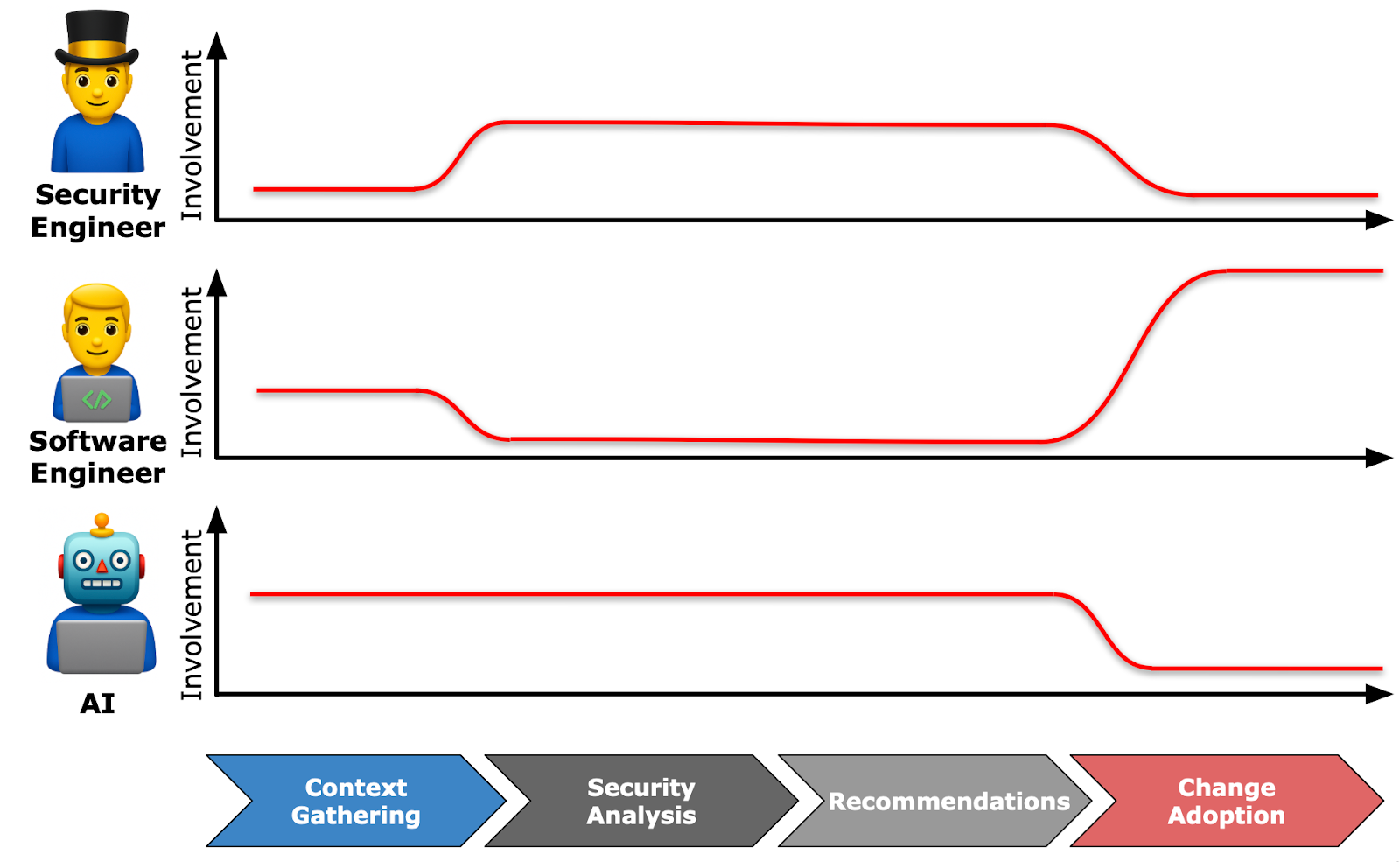

To address these issues, I think there's an opportunity to combine the two approaches. Developer-focused threat modeling, augmented by AI, gathers the context. AI then drafts the analysis, and the security team reviews that draft to validate that the recommendations are sensible, check for missing analysis, and tweak the prompt to improve the next review.

First, developers participate in AI-led context extraction to provide all the ingredients. AI generates the draft threat model based on the supplemented data. Then, security engineers lean on AI for recommendation analysis to ensure that the recommendations are likely to be understood and accepted by developers. Both are collaborative, multi-shot sessions, but they have different agendas.

Context extraction ("So, what are you building?")

One reality of threat modeling is that design documentation is far from comprehensive. I've run threat modeling sessions where the real value wasn't applying STRIDE but sitting with the team and asking questions until we all understood what was actually being built. I've had sessions where two engineers on the same team vehemently disagreed about how their own system worked.

Fixing the documentation gap is not a hill to die on. Engineers building features know their systems intimately, but that knowledge lives in their heads, in Slack threads, in decisions made at the whiteboard. The security-relevant context (why this approach over alternatives, what happens when this external service is unavailable, who actually has access to this data) often never makes it into formal documentation.

This is why traditional threat modeling requires meetings. You need the back-and-forth. You need to ask "wait, so when you say 'authenticated users,' do you mean anyone with an account or just admins?" You need someone to say "actually, that's not quite right" when you draw an architecture diagram based on the design doc.

AI analyzing static documentation will miss all of this. But AI can potentially replicate the conversation that extracts this context. And it's much more straightforward to have developers answer questions in an LLM chatbot setting than it is to teach them about the nuances of threat modeling.

An example workflow:

- Start with whatever the developer has: partial design docs, sketches, verbal descriptions

- AI asks clarifying questions to surface missing context: "How does authentication work here?" "What happens if this service is unavailable?" "Who can access this data?"

- Multi-turn interaction that mirrors a threat modeling meeting

- Developers describe in their own words, no security framework knowledge required

- AI surfaces disagreements or gaps: "The design doc says X, but you described Y. Which is accurate?"

Instead of developers threat modeling, they’re providing the context that traditionally requires a security engineer sitting in a room asking questions. The AI handles the question-asking; developers provide the architectural knowledge they already have.
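The workflow above can be sketched as a small loop. This is a sketch under stated assumptions, not a reference implementation: the topic checklist, `ExtractionResult`, and `extract_context` are hypothetical names, the `ask` callable stands in for a chat turn with the developer (in practice an LLM would phrase and adapt the questions), and the conflict check is a naive string comparison where a real system would need semantic judgment.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical checklist. The questions come from the workflow above;
# the topic keys are invented for this sketch.
REQUIRED_TOPICS = {
    "authentication": "How does authentication work here?",
    "availability": "What happens if this service is unavailable?",
    "data_access": "Who can access this data?",
}


@dataclass
class ExtractionResult:
    answers: dict[str, str]  # topic -> the developer's own words
    gaps: list[str]          # topics nobody could answer
    conflicts: list[str]     # topics where the doc and the developer differ


def extract_context(
    doc_claims: dict[str, str],
    ask: Callable[[str], str],
) -> ExtractionResult:
    """Ask one question per topic, then flag gaps and doc/developer conflicts."""
    answers: dict[str, str] = {}
    for topic, question in REQUIRED_TOPICS.items():
        reply = ask(question).strip()
        if reply:
            answers[topic] = reply

    gaps = [t for t in REQUIRED_TOPICS if t not in answers]

    # Placeholder comparison: case-insensitive exact match only.
    conflicts = [
        t for t, reply in answers.items()
        if t in doc_claims and doc_claims[t].strip().lower() != reply.lower()
    ]
    return ExtractionResult(answers, gaps, conflicts)


# Scripted developer replies stand in for an interactive session.
scripted = {
    "How does authentication work here?": "Session cookies, admins only",
    "What happens if this service is unavailable?": "",
    "Who can access this data?": "Support staff and the account owner",
}
doc = {
    "authentication": "Any logged-in user",
    "data_access": "Support staff and the account owner",
}
result = extract_context(doc, ask=lambda q: scripted[q])
print("gaps:", result.gaps)
print("conflicts:", result.conflicts)
```

The gaps and conflicts are the useful output here: they are exactly the "wait, which is it?" moments a security engineer would otherwise have to surface in a meeting.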

Recommendation analysis ("Here's the todo list, for your consideration")

Once you have context from developers, security teams need to analyze it. AI can help, not by replacing security judgment, but by scaffolding it with an initial draft analysis. The key is that the security team isn't starting their analysis from scratch. They're validating and refining analysis, catching the subtle issues AI misses, and then, vitally, ensuring recommendations actually make sense to the builders they’re supporting.

An example workflow:

- AI parses the context developers provided

- AI applies security frameworks (STRIDE, attack trees, whatever)

- AI flags likely issues and gaps for security team review

- Security team validates, refines, adds nuance that AI misses

- Security team ensures specific, actionable, worthwhile recommendations

This looks approximately like existing approaches to AI-assisted threat modeling, but benefits from deeper context about the design. The guidance here is for security to focus on its role as quality control for the recommendations. The details of the underlying analysis methodology are being explored by numerous organizational projects and products, and will likely vary based on culture and values.
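As a rough illustration of that quality-control role, the sketch below models AI output as a queue of draft findings that only reach developers after a human verdict. Everything here is hypothetical: `DraftFinding`, `Verdict`, and the helper functions are invented names, the drafts are hard-coded where an LLM's output would go, and matching existing countermeasures by exact text is a deliberate simplification.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    REJECTED = "rejected"


@dataclass
class DraftFinding:
    """One AI-drafted threat and its suggested change (hypothetical shape)."""
    threat: str
    recommendation: str
    verdict: Verdict = Verdict.PENDING
    reviewer_note: str = ""


def drop_existing_countermeasures(findings, existing):
    """Filter out drafts that recommend something the team already does.

    Exact, case-insensitive text match is an assumption for brevity.
    """
    known = {c.strip().lower() for c in existing}
    return [f for f in findings if f.recommendation.strip().lower() not in known]


def review(finding, accept, note="", revised=None):
    """Record the security reviewer's decision, optionally rewording the draft."""
    if revised is not None:
        finding.recommendation = revised
    finding.verdict = Verdict.ACCEPTED if accept else Verdict.REJECTED
    finding.reviewer_note = note


def final_recommendations(findings):
    """Only human-accepted recommendations are handed to developers."""
    return [f.recommendation for f in findings if f.verdict is Verdict.ACCEPTED]


# Stand-in for an AI-generated draft; illustrative data only.
drafts = [
    DraftFinding("Spoofed upload origin", "Require CSRF tokens on the upload form"),
    DraftFinding("Malicious file content", "Scan uploads for malware"),
    DraftFinding("Data exposure in transit", "Use TLS"),
]
queue = drop_existing_countermeasures(drafts, existing=["Use TLS"])
review(queue[0], accept=True)
review(queue[1], accept=False,
       note="Avatars are re-encoded server-side; scanning adds little")
print(final_recommendations(queue))
```

Keeping rejected drafts and their notes around, rather than deleting them, gives the team raw material for tweaking the prompt before the next review.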

Putting your LLMs to work

Developer-only threat modeling can fail because developers lack security expertise. AI-only threat modeling can fail because AI lacks context. Split the function into context extraction (where AI helps developers) and recommendation analysis (where AI helps security), and you address both problems.

The time savings come from security no longer spending a chunk of every review collecting context and calling out the basics. AI bridges from "here's what I'm building" to "here's what we need to know to analyze it" to "here are the specific changes that would reduce risk."

AI can make threat modeling faster, but the goal is making it scalable without becoming meaningless. AI can handle the mechanical parts of analyzing a proposed change to suggest threats and countermeasures. We humans must preserve the team-to-team dynamic by grounding AI use in real organizational context rather than treating it as a generic oracle. That's the split that matters.
